You’ve just seen an ad for a real estate firm and you wonder: How did a start-up afford to have Elon Musk appear in its commercial?

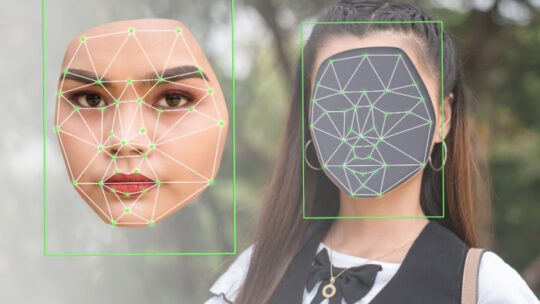

In fact, it wasn’t the controversial billionaire you saw. Instead, it was a digital reproduction of Musk, a deepfake made of video and sound clips, along with photos, digitally sewn together. The result looks and sounds almost exactly like Musk.

Nor is Musk the only celebrity whose likeness has appeared in an ad without authorization.

Beyond advertising, deepfake technology can also create bogus people who interact with actual people in real time, or should we say deepfake time?

As crisis PR pros know, effective messaging and corporate reputation depend, in large part, on earning audience members’ trust. Dropping deepfakes into the equation certainly complicates PR maxims about trust. Accordingly, deepfake technology is making the battle against disinformation more formidable.

Finding Deepfakes

For that reason, learning about deepfakes and including them in your social media monitoring should be part of your agenda, particularly if you represent high-profile individuals or business leaders. Extend that monitoring to so-called fringe sites like Reddit.

Unfortunately, monitoring social media, even with sophisticated systems, is only a start. That's because deepfakes “are beginning to pop up everywhere,” says Thomas Frank, chief creative officer, kglobal.

For example, advanced deepfakes can impersonate individuals during voice and video calls, says Dr. Johannes Ullrich, dean of research at SANS Technology Institute. In addition, the technology can help criminals impersonate someone and then use their creation to access financial and other systems. The possibilities for stealing money and data seem endless.

Identifying Deepfakes

Fortunately, there are a few low-tech ways communicators can battle deepfakes. The first involves human monitoring. For example, sources such as the “Verification Handbook” help PR pros spot disinformation, including deepfakes and content made with other emerging media technologies.

The Handbook lists several signs of a deepfake:

- Potential distortions at the forehead/hairline, or as a face moves beyond a fixed field of motion

- Lack of detail on the teeth

- Excessively smooth skin

- Absence of blinking

- A static speaker without real head movements or range of expression

- Glitches when a person turns from facing forward to sideways

Aside from video, manipulated images are a growing threat because they are easier to produce. To catch manipulated images, you can run reverse image searches using Google or tineye.com.

A second low-tech option calls for using common sense, Frank of kglobal says.

“If you find it odd that someone says something out of character,” slow the video and watch details closely, he says. “Unnatural blinking, blurry or unstable features, lack of emotion, awkward body language, incorrect coloring or lighting and distorted audio” are telltale signs.

Similarly, Ullrich stresses the importance of companies “presenting a consistent image.” This makes it easier for people to spot oddities and “identify deepfakes.”

More Resources

Last year, PRSA unveiled Voices4Everyone, part of which offers communicators myriad tools for combating disinformation. An architect of Voices’ disinformation section, Michael Cherenson, EVP, Success Communications Group, says the most powerful tool PR has for countering deepfakes and disinformation is found at the profession's core.

“Building and maintaining trust with key publics and stakeholders…will enable…organization[s] to counter…manipulation,” he says.

However, no matter how trustworthy a brand, “lies are almost impossible to un-see or hear,” Cherenson adds. As a result, a “quick, clear, factual and passionate” response is necessary, especially since it’s “often difficult to slow the spread once the fakes have gone viral.”

In It Together

The consensus is that industries must work in concert against the deepfake threat. Fortunately, major technology companies already are collaborating, Frank says. For example, Facebook, Microsoft and others established a deepfake detection challenge two years ago.

These collaborations soon will go from nice-to-have to imperative, Frank believes. “Google, YouTube [and] Meta will need to be able to identify unauthentic content or they will lose user trust.”

As with many other technologies, countermeasure designers are battling bad actors, who are developing tools to defeat them, Frank says.

Better Security

For that reason, Ullrich believes embedding greater security protocols in products is the best way of deterring impersonation programming. Callbacks and requiring that multiple individuals “authorize critical actions can help,” he says.
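For teams that want to see how the two safeguards Ullrich mentions might fit together, here is a minimal sketch in Python. It is an illustration only, not Ullrich's method or any product's actual design: the `CriticalAction` class, its fields, the two-approver threshold and the wire-transfer scenario are all hypothetical. The idea is that an approval counts only when the approver was reached through a separately known channel (the callback), and the action goes ahead only once enough different people have signed off.

```python
from dataclasses import dataclass, field


@dataclass
class CriticalAction:
    """A sensitive request (hypothetical example: a wire transfer) awaiting sign-off."""
    description: str
    required_approvals: int = 2  # assumed threshold; set per organization
    approvers: set = field(default_factory=set)

    def approve(self, person: str, verified_by_callback: bool) -> None:
        # Count an approval only if the approver was confirmed through a
        # separately known channel (the "callback"), not the incoming call.
        if verified_by_callback:
            self.approvers.add(person)

    def is_authorized(self) -> bool:
        # A set ignores duplicates, so one person cannot approve twice.
        return len(self.approvers) >= self.required_approvals


action = CriticalAction("Wire $50,000 to a new vendor")
action.approve("alice", verified_by_callback=True)
action.approve("alice", verified_by_callback=True)  # duplicate, ignored
action.approve("bob", verified_by_callback=False)   # callback failed, ignored
print(action.is_authorized())  # False

action.approve("carol", verified_by_callback=True)
print(action.is_authorized())  # True
```

In this sketch, a convincing voice or video impersonation of one executive is not enough by itself to authorize the action: the request still needs a second person, and each approver must be confirmed on a channel the impersonator does not control.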

Cherenson believes addressing deepfakes will require an “all-of-society” approach, including “new laws and regulations, enforcement, widespread news literacy and inoculation to build collective cognitive muscles.”

PR Leadership

PR should lead on this, he adds. “Playing whack-a-mole will NOT resolve these issues,” Cherenson says. “The profession must start playing offense and address the larger threats.”

There’s a bright side to deepfakes, however. For instance, Frank cites “harmless” deepfakes, “such as those used in mainstream media, like films.” He paints a scenario where one day users will “choose whatever actor” they want to play a lead in a film. “Imagine!”

He also envisions legitimate, practical uses for deepfake technology, such as creating ‘actors’ who then appear in training videos.